The property rights approach to moral uncertainty

This report was produced as part of the Happier Lives Institute’s 2022 Summer Research Fellowship. Contact: harry.lloyd@yale.edu. Any critical comments on this working paper would be gratefully received.

1. Introduction

Distribution: Imagine that some agent J is devoting her life to ‘earning to give’: J is pursuing a lucrative career in investment banking and plans to donate most of her lifetime earnings to charity. According to the moral theory Thealth in which J has 60% credence, by far and away the best thing for her to do with her earnings is to donate them to global health charities, and the next best thing is to donate them to charities that benefit future generations by fighting climate change. On the other hand, according to the moral theory Tfuture in which J has 40% credence, by far and away the best thing for her to do with her earnings is to donate them to benefitting future generations, and the next best thing is to donate them to global health charities.2J knows exactly what will happen if she donates her money to global health charities. Likewise, she knows exactly what will happen if she donates her money to charities that benefit future generations by fighting climate change. Thealth and Tfuture are competing moral theories, rather than competing empirical theories. A moral theory is a maximally consistent conjunction of propositions about moral facts. For sake of simplicity, I assume in this paper that all decision makers are idealized Bayesian reasoners who have credences over such conjunctions (cf. Cohen, Nissan-Rozen and Maril forthcoming). On all other issues, Thealth and Tfuture are in total agreement: for instance, they agree on where J should work, what she should eat, and what kind of friend she should be. They only disagree about which charity J should donate to. Finally, neither Thealth nor Tfuture is risk loving: each theory implies that an $x donation to a charity that the theory approves of is at least no worse than a risky lottery over donations to that charity whose expected donation is $x. In light of her moral uncertainty, what is it appropriate for J to do with her earnings?3Greaves and Cotton-Barratt (2019, §7.2) discuss a similar case.

According to one prima facie plausible proposal, it is appropriate for J to donate 60% of her earnings to global health charities and 40% of them to benefitting future generations – call this response Proportionality. Despite Proportionality’s considerable intuitive appeal, none of the theories of appropriateness under moral uncertainty thus far proposed in the literature support this simple response to Distribution.

In this paper, I propose and defend a Property Rights Theory (henceforth: PRT) of appropriateness under moral uncertainty, which supports Proportionality in Distribution.4In real-world investment decisions, many individuals seem to assume that dividing their savings equally between all of the available options is a sensible response to risk (inter alia Benartzi and Thaler, 2001). Furthermore, in at least some experimental settings, many people divide their resources Proportionally in response to empirical uncertainty (Loomes, 1991). However, on further reflection these choices look more like biases or heuristics than like rational responses to empirical uncertainty. For instance, investors whose financial provider happens to offer four funds in bonds and only one fund in stocks will often invest four-fifths of their savings into bonds and only one fifth into stocks, whereas otherwise similar investors whose financial provider happens to offer four funds in stocks and only one fund in bonds will often invest four-fifths of their savings into stocks and only one fifth into bonds. This is prima facie irrational.

One might worry that the Proportional response to Distribution likewise reflects an irrational bias or heuristic. (I thank Martin Vaeth for suggesting this objection.) In response, I report that my personal intuitions in favour of the Proportional response to Distribution are far more robust to reflection than any intuitions that I have in favour of Proportional responses to empirical uncertainty. Moreover, I argue in §4.4 below that normative uncertainty differs in several important respects from empirical uncertainty. An irrational response to empirical uncertainty might be a perfectly rational response to normative uncertainty (and vice versa).

In §2.1, I introduce the notion of appropriateness. In §2.2, I introduce several of the theories of appropriateness that have been proposed thus far in the literature. In §2.3, I show that these theories fail to support Proportionality. In §§3.1-3.3, I introduce PRT and I demonstrate that it supports Proportionality in Distribution. In §§3.4-3.9, I discuss the details. In §3.10, I extend my characterisation of PRT to cover cases where an agent faces a choice between discrete options, as opposed to resource distribution cases like Distribution.5Choices between discrete options have thus far dominated the literature on moral uncertainty. In §4, I argue that PRT compares favourably to the alternatives introduced in §2.2. In §5, I conclude.

2. Alternatives to the Property Rights Theory

2.1 Appropriateness

Suppose I believe that animal suffering does not matter morally, but also that I am somewhat uncertain about this. If animal suffering matters morally, then I am required to eat tofu rather than foie gras, whereas if animal suffering does not matter morally, then I am permitted to eat either tofu or foie gras. In view of my moral uncertainty, there is plausibly some sense in which it is inappropriate for me to eat the foie gras rather than the tofu.6MacAskill, Bykvist and Ord 2020, p. 15.

In this paper, I shall discuss competing normative theories about which actions are appropriate under moral uncertainty. I shall not discuss the metaethical question of how we should understand this intuitive notion of ‘appropriateness’. Instead, I shall tentatively adopt MacAskill, Bykvist and Ord’s suggestion that to call some choice ‘appropriate’ is to say that this choice would be rational for the decision maker, if she were “morally conscientious”.7MacAskill, Bykvist and Ord 2020, p. 20; see also Bykvist 2014; Sepielli 2014. Morally conscientious agents “care about doing right and refraining from doing wrong” and “also care more about serious wrong-doings than minor wrong-doings.”8MacAskill, Bykvist and Ord 2020, p. 20. MacAskill, Bykvist and Ord “take this to be a precisification of the ordinary notion of conscientiousness, loosely defined as ‘governed by one’s inner sense of what is right,’ or ‘conforming to the dictates of conscience.’”9MacAskill, Bykvist and Ord 2020, p. 20.

2.2 Theories of appropriateness

The first theory of appropriateness that I discuss in this section is My Favourite Theory (henceforth: ‘MFT’).10Gracely 1996; Gustafsson and Torpman 2014. To a first approximation, MFT says that:

an action A is appropriate in some choice situation χ iff according to the theory that the decision maker has most credence in, A is maximally choiceworthy in χ.11Gustafsson and Torpman’s (2014) modifications need not concern us here.

The choiceworthiness of an action in some choice situation according to some moral theory is the strength of the decision maker’s moral reasons in favour of performing that action according to that moral theory.12MacAskill, Bykvist and Ord 2020, p. 4. I actually think that MFT is better defined in terms of a slightly different concept, which I call ‘decisionworthiness’ (see [redacted]). We can ignore this complication here. In other words, A is more choiceworthy than B according to some moral theory T iff the decision maker’s moral reasons in favour of A are stronger than her moral reasons in favour of B according to T.

MFT arguably has implausible implications in certain cases where the decision maker has strictly positive credence in (henceforth: ‘entertains’) a large number of theories.13Relatedly, MacAskill, Bykvist and Ord (2020, §2.I) have argued that MFT suffers from a ‘problem of theory individuation’ (see also [redacted]). Suppose that the theories T1, …, T98 all agree that action A is the best option available to the decision maker in some choice situation and that action B is the worst. However, theory T99 says that B is the best option and A is the worst. The decision maker has credence 0.01 in each of the theories T1, …, T98 and credence 0.02 in T99. According to MFT, action B is the only appropriate option for that decision maker in this situation.

One way to avoid this result is to adopt My Favourite Option (henceforth: ‘MFO’). According to MFO:

an action A is appropriate in some situation χ iff for any other option B that is available to the decision maker in χ, the decision maker’s credence in A being maximally choiceworthy in χ is greater than or equal to her credence in B being maximally choiceworthy in χ.14As with MFT (see n. 12 above), I actually think that MFO is better defined in terms of a slightly different concept to choiceworthiness, which I call ‘decisionworthiness’ (see [redacted]). Again, we can ignore this complication here.

In the case that I use to critique MFT, the decision maker has credence 0.98 in A being maximally choiceworthy, and credence 0.02 in B being maximally choiceworthy. Hence, MFO implies that option A is uniquely appropriate.
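The contrast between MFT and MFO in the 99-theory case can be checked with a short sketch. The encoding below (each theory as a pair of credence and top-ranked option, and the function names) is a hypothetical illustration rather than anything taken from the literature, and it follows only the first-approximation statements of MFT and MFO given above.

```python
from collections import defaultdict

# Hypothetical encoding: each theory is (credence, option it ranks best).
# T1..T98 each have credence 0.01 and favour A; T99 has credence 0.02 and favours B.
theories = [(0.01, "A")] * 98 + [(0.02, "B")]

def my_favourite_theory(theories):
    """Option favoured by the single theory with the highest credence."""
    _, best_option = max(theories, key=lambda theory: theory[0])
    return best_option

def my_favourite_option(theories):
    """Option(s) with the highest total credence of being maximally choiceworthy."""
    totals = defaultdict(float)
    for credence, best_option in theories:
        totals[best_option] += credence
    top = max(totals.values())
    winners = sorted(option for option, total in totals.items() if total == top)
    return winners, dict(totals)

mft_choice = my_favourite_theory(theories)
mfo_choices, totals = my_favourite_option(theories)
print(mft_choice, mfo_choices, totals)
```

Under these assumptions MFT selects B because T99 is the single most credible theory, whereas MFO selects A because the credences of T1, …, T98 are pooled.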

Both MFT and MFO arguably have implausible implications in so-called ‘Jackson cases’.15Inspired by Jackson 1991.

Jackson: In some situation, suppose that according to T1: A is the best option; B is almost as good; and C is terrible. According to T2: C is the best option; B is almost as good; and A is terrible. Furthermore, suppose that the decision maker has credence 0.51 in T1, and 0.49 in T2 (see Figure 1).

Figure 1: Jackson cases

According to both MFT and MFO, it is uniquely appropriate for the decision maker to choose option A in Jackson. Yet many of us intuit, to the contrary, that it is uniquely appropriate for the decision maker to ‘hedge her bets’ by choosing option B. After all, the decision maker is certain that B is near-maximally choiceworthy. By contrast, she has credence 0.49 in option A being terrible. Hence option B seems more appropriate than option A.16Critics have also outlined money-pump objections to MFO (Gustafsson and Torpman 2014, §2; MacAskill and Ord 2020, §4).

T1 and T2 are intertheoretically unit-comparable iff for any actions A, B, C, and D, there exists some k for which it is true and meaningful to say that the difference in choiceworthiness between A and B according to T1 is k times the size of the difference in choiceworthiness between C and D according to T2.17MacAskill, Bykvist and Ord 2020, p. 7. In cases where T1 and T2 are intertheoretically unit-comparable, one option for honouring our intuitions about Jackson is to Maximise Expected Choiceworthiness (henceforth: ‘MEC’).18Oddie 1994; Lockhart 2000; Sepielli 2009; 2010; Wedgwood 2013; 2017; MacAskill 2014; MacAskill and Ord 2020; MacAskill, Bykvist and Ord 2020. According to MEC:

an action is appropriate in some situation iff it maximises intertheoretic expected choiceworthiness.19What about cases where choiceworthiness is interval-scale measurable but not inter-theoretically unit-comparable? Perhaps the simplest proposal open to advocates of MEC here is to suggest that one should Maximise Expected Normalized Choiceworthiness (MacAskill, Cotton-Barratt and Ord 2020; MacAskill, Bykvist and Ord 2020, chapter 4; Ecoffet and Lehman 2021). For objections, see Pivato 2022; Gustafsson forthcoming; [redacted].

Secondly, what about cases where one or more of the entertained theories ranks options ordinally rather than cardinally? Perhaps the simplest proposal open to advocates of MEC here is to suggest that one should somehow cardinalize the ordinal theories. After that, one can simply Maximise Expected Normalized Choiceworthiness (MacAskill, Bykvist and Ord 2020, pp. 108-10). For criticisms of and two alternatives to this theory-by-theory cardinalization approach, see Tarsney 2021; [redacted]. Cf. also Tarsney 2019a; Carr 2022; Pivato 2022; Gustafsson forthcoming.

The intertheoretic expected choiceworthiness of some action is a weighted average of its choiceworthiness according to each of the entertained theories, where each theory’s weight is equal to the decision maker’s credence in that theory.20Technical note: this definition of expected choiceworthiness is normatively ex post but empirically ex ante – one of four combinatorically possible definitions of expected choiceworthiness. If one restricts MEC to cases where all of the entertained theories have von Neumann-Morgenstern choiceworthiness functions (cf. MacAskill, Bykvist and Ord 2020, pp. 107-8), then these four possible definitions are extensionally equivalent (Dietrich and Jabarian, forthcoming). This complication need not concern us here. Suppose, for instance, that in Jackson, T1 and T2 are intertheoretically unit-comparable, and their choiceworthiness schedules are as follows:

Table 1a

| Choiceworthiness | T1: 0.51 credence | T2: 0.49 credence |
| --- | --- | --- |
| A | 10 | -10 |
| B | 9 | 9 |
| C | -10 | 10 |

Hence, A’s expected choiceworthiness is (0.51 x 10) + (0.49 x -10) = 0.2, B’s expected choiceworthiness is (0.51 x 9) + (0.49 x 9) = 9, and C’s expected choiceworthiness is (0.49 x 10) + (0.51 x -10) = -0.2.

Table 1b

| Choiceworthiness | T1: 0.51 credence | T2: 0.49 credence | Intertheoretic expectation |
| --- | --- | --- | --- |
| A | 10 | -10 | 0.2 |
| B | 9 | 9 | 9 |
| C | -10 | 10 | -0.2 |

Thus, B uniquely maximises expected choiceworthiness in this situation.
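The arithmetic behind this verdict can be reproduced in a few lines. The sketch below simply restates the credences and the choiceworthiness schedule for Jackson; the dictionary layout and function name are illustrative assumptions.

```python
# Credences and choiceworthiness schedule for the Jackson case.
credences = {"T1": 0.51, "T2": 0.49}
choiceworthiness = {
    "A": {"T1": 10, "T2": -10},
    "B": {"T1": 9, "T2": 9},
    "C": {"T1": -10, "T2": 10},
}

def expected_choiceworthiness(option):
    """Credence-weighted average of the option's choiceworthiness across theories."""
    return sum(credences[t] * choiceworthiness[option][t] for t in credences)

expectations = {option: expected_choiceworthiness(option) for option in choiceworthiness}
mec_choice = max(expectations, key=expectations.get)
print(expectations, mec_choice)
```

Up to floating-point rounding, the expectations come out as 0.2 for A, 9 for B, and -0.2 for C, so the maximiser is B.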

The final theory of appropriateness that I introduce in this section is Greaves and Cotton-Barratt’s Nash Bargaining Theory (henceforth: ‘NBT’).21Greaves and Cotton-Barratt 2019. NBT is only applicable in cases where all of the entertained theories’ choiceworthiness orderings can be represented by von Neumann-Morgenstern (henceforth: ‘vNM’) choiceworthiness functions. A choiceworthiness function is vNM iff the ex-ante choiceworthiness of bringing about any lottery L over two or more possible outcomes is equal to a weighted average of the choiceworthinesses of risklessly bringing about each of L’s possible outcomes, where each possible outcome’s weight in this average is its probability of occurring under L. For instance, suppose that according to some vNM representation of T’s choiceworthiness ordering, A and B have choiceworthiness values 8 and 4 respectively. Then the ex-ante choiceworthiness of a fifty-fifty lottery over A and B must be (0.5 x 8) + (0.5 x 4) = 6.
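As a minimal sketch of the vNM condition just stated, the ex-ante choiceworthiness of a lottery can be computed as a probability-weighted average of the choiceworthiness of its outcomes. The representation of a lottery as a list of (probability, choiceworthiness) pairs is an assumption made purely for illustration.

```python
def lottery_choiceworthiness(lottery):
    """Ex-ante choiceworthiness of a lottery under a vNM choiceworthiness function.

    `lottery` is a list of (probability, choiceworthiness of the sure outcome) pairs.
    """
    total_probability = sum(p for p, _ in lottery)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * cw for p, cw in lottery)

# Fifty-fifty lottery over A (choiceworthiness 8) and B (choiceworthiness 4).
fifty_fifty = [(0.5, 8), (0.5, 4)]
value = lottery_choiceworthiness(fifty_fifty)
print(value)
```

A risk-avoidant theory would, by contrast, assign this lottery a value below the weighted average, which is what makes it non-vNM.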

In cases where all of the entertained theories are vNM representable, NBT says that one should model the set of entertained theories as a set of bargainers. In each choice situation, the bargainers will bargain with each other over which action is to be performed. The greater the decision maker’s credence in some entertained theory, the greater the ‘leverage’ with which its bargainer will enter the bargaining problem. A popular solution concept for such bargaining problems is the Nash Bargaining Solution; in particular, Greaves and Cotton-Barratt use the Asymmetric Nash Bargaining Solution, whose technicalities I relegate to the appendix. According to Greaves and Cotton-Barratt’s NBT:

an action is appropriate in some situation iff it is an Asymmetric Nash Bargaining Solution of the corresponding bargaining problem.

Greaves and Cotton-Barratt themselves “tentatively” reject this theory in favour of MEC.22One problem with NBT is the problem of scenario individuation: how choice situations are individuated makes a big difference to the theory’s appropriateness judgements. Greaves and Cotton-Barratt (2019, §6) call this the “problem of small worlds”. To overcome this problem, one would have to develop a principled theory of scenario individuation (cf. [redacted]).

My statement of NBT is somewhat ambiguous, since it does not specify how to construct the bargaining problems ‘corresponding’ to any particular choice situations. In particular, I have not specified how to select the disagreement point for these bargaining problems. The ‘disagreement point’ in any bargaining problem is the outcome that the bargainers believe will eventuate if they do not together settle on a negotiated agreement. Greaves and Cotton-Barratt suggest that in the application of bargaining theory to moral uncertainty, “it is unclear how the disagreement point should be identified. The talk of different theories ‘bargaining’ with one another is only metaphorical, and there is not obviously any empirical fact of the matter regarding ‘what would happen in the absence of agreement.’”23Greaves and Cotton-Barratt 2019, §3.1. According to Greaves and Cotton-Barratt, NBT theorists should simply “select some disagreement point such that bargaining theory with that choice of disagreement point supplies a satisfactory” theory of appropriateness.24Greaves and Cotton-Barratt 2019, §3.1. I resist this suggestion in n. 39 below.

Greaves and Cotton-Barratt do not decide in favour of any particular disagreement point. Instead, “as far as possible” they “proceed in a way that is independent of how the disagreement point is identified.”25Greaves and Cotton-Barratt 2019, §3.1.

Possible disagreement points mentioned by Greaves and Cotton-Barratt include:

- Random dictator: a lottery is held, wherein each bargainer’s chance of winning is equal to the decision maker’s credence in the moral theory represented by that bargainer. The lottery winner gets to decide how the decision maker will act in the current choice situation.

- Anti-utopia: an outcome whose choiceworthiness according to any given moral theory is the minimum choiceworthiness possible in this choice situation according to that moral theory.

- Do nothing: the outcome that would eventuate if the decision maker did nothing.

2.3 Proportionality

So much for the finer points of NBT. I now show that MFT, MFO, MEC, and NBT do not support Proportionality in Distribution. First, I consider MFT and MFO. Recall that agent J in Distribution has 60% credence in Thealth and thus 60% credence in it being uniquely maximally choiceworthy to donate to global health charities in Distribution. Hence, both MFT and MFO imply that it is most appropriate, contra Proportionality, for J to donate all of her money to global health charities. This is an intuitive disadvantage of these theories.

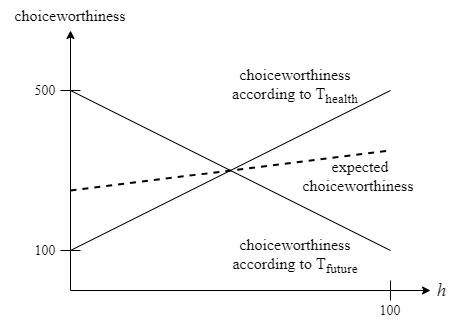

In order to apply MEC to Distribution, let us suppose that the choiceworthiness evaluations of Thealth and Tfuture are intertheoretically unit-comparable. For sake of concreteness, suppose that according to Thealth, one dollar donated to global health charities does five times as much good as one dollar spent on benefitting future generations, and vice versa according to Tfuture. In particular, if h% of J’s money is spent on global health, and (100 – h)% is spent on benefitting future generations, then choiceworthiness according to Thealth is 5h + (100 – h) = 100 + 4h, whereas choiceworthiness according to Tfuture is h + 5(100 – h) = 500 – 4h. Intertheoretic expected choiceworthiness as a function of h is therefore 0.6(100 + 4h) + 0.4(500 – 4h) = 260 + 0.8h.

Figure 2: Choiceworthiness as a function of h

Expected choiceworthiness is clearly maximised when h is as large as possible. Hence, under these assumptions, MEC implies that it is most appropriate, contra Proportionality, for J to donate all of her money to global health charities. In general, MEC only ever implies Proportionality as a matter of coincidence.
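The corner solution can be confirmed numerically. The sketch below uses the two choiceworthiness functions assumed above (100 + 4h and 500 - 4h) and searches over whole-percentage splits; the grid and the function names are illustrative assumptions.

```python
def cw_health(h):
    """Choiceworthiness according to Thealth when h% goes to global health."""
    return 5 * h + (100 - h)      # = 100 + 4h

def cw_future(h):
    """Choiceworthiness according to Tfuture when h% goes to global health."""
    return h + 5 * (100 - h)      # = 500 - 4h

def expected_cw(h, credence_health=0.6):
    """Intertheoretic expected choiceworthiness of the split h."""
    return credence_health * cw_health(h) + (1 - credence_health) * cw_future(h)

splits = range(0, 101)            # h = 0, 1, ..., 100
best_h = max(splits, key=expected_cw)
slope = expected_cw(1) - expected_cw(0)
print(best_h, expected_cw(60), expected_cw(100), slope)
```

With a general credence p in Thealth, the slope of expected choiceworthiness in h is 4p - 4(1 - p) = 8p - 4 under these assumptions, so MEC recommends h = 100 whenever p exceeds one half and h = 0 whenever p falls below it. The Proportional split h = 60 is never the unique maximiser here.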

Greaves and Cotton-Barratt’s NBT comes much closer than MFT, MFO, and MEC to supporting Proportionality. Indeed, if (as in Figure 2) Thealth and Tfuture can both be represented by vNM choiceworthiness functions that are linear in h, then the random dictator and anti-utopia versions of NBT will both support Proportionality as an appropriate response to Distribution.26Greaves and Cotton-Barratt 2019, §7.2. A vNM choiceworthiness function linear in h corresponds to risk neutrality with respect to h. In other words: the choiceworthiness of a lottery over two or more different values of h is always equal to the choiceworthiness of the expected value of h under that lottery.

Under the random dictator lottery in Distribution, h = 100 is chosen with 60% probability, and h = 0 is chosen with 40% probability, yielding 60 as the expected value of h. Hence, if Thealth and Tfuture can both be represented by vNM choiceworthiness functions that are linear in h, then an outcome is no less choiceworthy than the random dictator point according to both Thealth and Tfuture iff that outcome is no less choiceworthy than h = 60 according to both Thealth and Tfuture. Of course, Thealth prefers h to be as large as possible, whereas Tfuture prefers h to be as small as possible. Hence, every expected value of h other than 60 is less choiceworthy than the random dictator point according to either Thealth or Tfuture. For this reason, if Thealth and Tfuture can both be represented by vNM choiceworthiness functions that are linear in h, then the random dictator version of NBT supports Proportionality as an appropriate response to Distribution.27However, note that it does not support Proportionality as the uniquely appropriate response to Distribution. On the contrary, according to this version of NBT, any lottery having expectation h = 60 is also an appropriate response to Distribution. Greaves and Cotton-Barratt (2019, §7.2) regard this result as implausible, although I am not so sure that I share this intuition.
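This reasoning can be illustrated with a small check. The sketch below takes the linear functions from Figure 2 as given, computes each theory's choiceworthiness at the random dictator point, and lists the sure splits that neither theory regards as worse than that point. Restricting attention to whole-percentage sure splits and using a small numerical tolerance are simplifying assumptions.

```python
CREDENCE_HEALTH, CREDENCE_FUTURE = 0.6, 0.4
TOLERANCE = 1e-9  # guards against floating-point noise in the comparisons

def cw_health(h):
    return 100 + 4 * h   # linear in h, as in Figure 2

def cw_future(h):
    return 500 - 4 * h   # linear in h, as in Figure 2

def random_dictator_value(cw):
    """Ex-ante choiceworthiness of the lottery: h = 100 w.p. 0.6, h = 0 w.p. 0.4."""
    return CREDENCE_HEALTH * cw(100) + CREDENCE_FUTURE * cw(0)

d_health = random_dictator_value(cw_health)
d_future = random_dictator_value(cw_future)

# Sure splits that are no less choiceworthy than the disagreement point
# according to both theories.
acceptable = [
    h for h in range(101)
    if cw_health(h) >= d_health - TOLERANCE and cw_future(h) >= d_future - TOLERANCE
]
print(d_health, d_future, acceptable)
```

Under these assumptions the only sure split acceptable to both theories is h = 60.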

Under this linearity condition, the anti-utopia version of NBT also supports Proportionality in Distribution.28This follows from Greaves and Cotton-Barratt’s (2019, §4.1) “Proposition 1.” For want of space, I prescind from explaining here why this result obtains.

In summary: if Thealth and Tfuture can both be represented by vNM choiceworthiness functions that are linear in h, then at least two of Greaves and Cotton-Barratt’s versions of NBT support Proportionality in Distribution.

However, in a great many cases where one or both of the vNM choiceworthiness functions for Thealth or Tfuture are non-linear in h, both the random dictator and the anti-utopia versions of NBT imply that Proportionality is inappropriate in Distribution. For instance, suppose that Thealth is risk averse with respect to h, so that the choiceworthiness ordering of Thealth can be represented by the concave vNM choiceworthiness function √(h/100). In other words: according to Thealth, the choiceworthiness of a lottery over two or more different values of h is always lower than the choiceworthiness of the expected value of h under that lottery. By contrast, suppose that Tfuture is risk neutral, so that its choiceworthiness ordering can be represented by the vNM choiceworthiness function 1 – (h/100) (see Figure 3).

Figure 3: Choiceworthiness as a function of h

Recall that in Distribution, under the random dictator lottery h = 100 is chosen with 60% probability, and h = 0 is chosen with 40% probability, yielding 60 as the expected value of h. Since Tfuture is risk neutral with respect to h, an outcome is no less choiceworthy than the random dictator point according to Tfuture iff in expectation, h ≤ 60. By contrast, because Thealth is somewhat risk averse with respect to h, certain low-risk outcomes are no less choiceworthy than the random dictator point according to Thealth even though these outcomes yield expected values of h strictly less than 60. For instance, according to Thealth, h = 36 with certainty is no less choiceworthy than the random dictator point, since √(36/100) = 0.6 = (0.6 x 1) + (0.4 x 0).
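The same check can be repeated for the risk-averse example. The sketch below uses the two functions stated above, √(h/100) for Thealth and 1 – (h/100) for Tfuture, and lists the whole-percentage sure splits that neither theory regards as worse than the random dictator point. The grid and the tolerance are illustrative assumptions.

```python
import math

CREDENCE_HEALTH, CREDENCE_FUTURE = 0.6, 0.4
TOLERANCE = 1e-12  # guards against floating-point noise in the comparisons

def cw_health(h):
    return math.sqrt(h / 100)   # concave in h: risk averse

def cw_future(h):
    return 1 - h / 100          # linear in h: risk neutral

def random_dictator_value(cw):
    """Ex-ante choiceworthiness of the lottery: h = 100 w.p. 0.6, h = 0 w.p. 0.4."""
    return CREDENCE_HEALTH * cw(100) + CREDENCE_FUTURE * cw(0)

d_health = random_dictator_value(cw_health)
d_future = random_dictator_value(cw_future)

acceptable = [
    h for h in range(101)
    if cw_health(h) >= d_health - TOLERANCE and cw_future(h) >= d_future - TOLERANCE
]
print(acceptable[0], acceptable[-1])
```

Whereas the linear case leaves only h = 60, the set of mutually acceptable sure splits now runs from h = 36 to h = 60, and the whole of that extra room lies below the Proportional split.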

Thus, under a random dictator disagreement point, bargainers are disadvantaged to the extent that they are risk averse.29Kihlstrom, Roth and Schmeidler 1981. A risk-averse bargainer is willing to accept a less-than Proportional share of the available resources in order to avoid the risk of getting nothing if no agreement is reached. In the particular example under discussion, the random dictator version of NBT implies, contra Proportionality, that it is appropriate for J to spend only half of her money on global health charities. The anti-utopia version of NBT also has this implication.30This follows from Greaves and Cotton-Barratt’s (2019, §4.1) “Proposition 1.” I can see no reason why appropriateness in Distribution should depend in this way on each theory’s degree of risk aversion. Bear in mind here that vNM choiceworthiness functions need not be ‘interval-scale’ measures of choiceworthiness.31Some choiceworthiness function CW( ⋅ ) is an interval-scale measure of choiceworthiness according to some moral theory T iff for any options A, B, C, and D:

(1) CW(A) > CW(B) iff A is more choiceworthy than B according to T; and

(2) CW(A) – CW(B) = k × (CW(C) – CW(D)) iff the difference in choiceworthiness between A and B is k times the difference in choiceworthiness between C and D according to T.

vNM choiceworthiness functions need not satisfy (2).
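The claim that the random dictator version of NBT recommends roughly an even split in the risk-averse example can be checked numerically. The sketch below assumes the standard textbook form of the Asymmetric Nash Bargaining Solution, which maximises the product of each bargainer's gain over the disagreement point raised to the power of her weight, with weights set equal to the decision maker's credences. The appendix formulation is not reproduced here, so this form is an assumption. The search is restricted to sure splits, which seems harmless in this example because any lottery over h is no better than its expected value according to both theories.

```python
import math

CREDENCE_HEALTH, CREDENCE_FUTURE = 0.6, 0.4

def cw_health(h):
    return math.sqrt(h / 100)   # risk-averse Thealth

def cw_future(h):
    return 1 - h / 100          # risk-neutral Tfuture

# Random dictator disagreement point: h = 100 w.p. 0.6, h = 0 w.p. 0.4.
d_health = CREDENCE_HEALTH * cw_health(100) + CREDENCE_FUTURE * cw_health(0)
d_future = CREDENCE_HEALTH * cw_future(100) + CREDENCE_FUTURE * cw_future(0)

def nash_product(h):
    """Weighted Nash product; zero wherever a theory gains nothing over disagreement."""
    gain_health = cw_health(h) - d_health
    gain_future = cw_future(h) - d_future
    if gain_health <= 0 or gain_future <= 0:
        return 0.0
    return gain_health ** CREDENCE_HEALTH * gain_future ** CREDENCE_FUTURE

# Grid search over sure splits in steps of 0.01 percentage points.
grid = [i / 100 for i in range(0, 10001)]
h_star = max(grid, key=nash_product)
print(round(h_star, 2))
```

Under these assumptions the maximiser lies very close to h = 50, well short of the Proportional h = 60.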

Another important disadvantage of NBT is that in cases where the choiceworthiness rankings of either Thealth or Tfuture are non-vNM, NBT is simply inapplicable.32Greaves and Cotton-Barratt (2019, n. 5) explicitly “assume that all moral theories obey the axioms of expected utility theory, in their treatment of empirical uncertainty [i.e.: are vNM].” They admit that “not all moral theories have a structure that is consistent with this assumption,” and that “this is a little awkward, since even if such moral theories seem implausible at the first-order level, ideally we would like our [theories of appropriateness] to apply to agents who have nonzero credence in such theories.” However, Greaves and Cotton-Barratt disclaim that they “do not know how to adapt bargaining theory so that this assumption is not required.”

Explaining a little further: the (Asymmetric) Nash Bargaining Solution is only invariant up to affine transformations of the bargainers’ utility functions. Hence, for NBT to be applicable, (1) the class of affine transformations of the chosen functional representations of the entertained theories’ choiceworthiness orderings must be somehow privileged over any other possible representations of those orderings. Furthermore, (2) the feasible set must be convex in order for the (Asymmetric) Nash Bargaining Solution to satisfy its characteristic axioms. In many cases where one or more of the bargainers have non-vNM preferences, conditions (1) and/or (2) are violated. Restricting the applicability of the (Asymmetric) Nash Bargaining Solution to cases where all of the bargainers have vNM preferences is the generally accepted way to ensure that conditions (1) and (2) are both satisfied.

For instance, suppose that Thealth or Tfuture is a risk-avoidant moral theory, according to which the ex-ante choiceworthiness of bringing about some risky lottery L over two or more possible outcomes is more sensitive to the choiceworthiness of risklessly bringing about L’s worst possible outcome than it is in a vNM-representable moral theory.33For a defence of this kind of risk weighting, see Buchak 2013. (The difference between vNM-representable and risk-avoidant moral theories is illustrated in Figure 4.) NBT is ex hypothesi inapplicable to a decision maker who has any positive credence in any risk-avoidant moral theories. This is a significant disadvantage of NBT.

Figure 4: vNM-representable and risk-avoidant moral theories

Sergio Tenenbaum has also recently argued that the best version of deontology need not be vNM. According to Tenenbaum, “deontological rules, prohibitions, and permissions apply primarily to intentional acts; [and] risk changes the nature of an [intentional] act, not the probability that the same act will be performed,” thus undercutting an important motivation for vNM representability.34Tenenbaum 2017, p. 675. Once again, NBT is inapplicable to a decision maker – like Sergio Tenenbaum – who has positive credence in any non-vNM versions of deontology. This is a significant disadvantage of NBT.

3. The Property Rights Theory

3.1 The basic idea

The idea behind NBT is that the decision maker’s entertained moral theories should be modelled as bargainers, who in each choice situation bargain with each other over which action will be performed. Relatedly, the idea behind certain voting-inspired theories of appropriateness – such as MacAskill’s Borda Count Theory35MacAskill 2016. The Borda Count Theory is designed to handle first-order moral theories that have merely ordinal choiceworthiness functions. See also Tarsney 2019a; MacAskill, Bykvist and Ord 2020, chapter 3; Ecoffet and Lehman 2021; [redacted]. – is that the decision maker’s entertained moral theories should be modelled as voters, who in each choice situation hold a vote to determine which action will be performed.36Newberry and Ord (2021, §4) suggest that MFT, MFO, and MEC can also be understood in these terms; MacAskill (2016, pp. 979-80) says the same thing about MFT and MFO; see also Ecoffet and Lehman 2021.

The idea behind PRT is that what is appropriate in each choice situation χ is determined by a certain economic model of χ. Each entertained moral theory Ti is modelled as a property-owning economic agent Ai, who has open to her all of the options open to the decision maker. In a resource distribution choice situation like Distribution, each theory-agent Ai is initially endowed with a share of the decision maker’s resources proportional to the decision maker’s credence in the corresponding theory Ti. Ai initially owns these resources, and can use them as she sees fit (this is how the ‘Property Rights’ Theory gets its name). For instance, Ai can spend her resources in any of the ways open to the decision maker. Theory-agents can also make contracts with each other governing how they will use their resources. Ai’s preference structure over the space of possible outcomes of the model is identical to Ti’s choiceworthiness structure over the corresponding space of actions open to the decision maker.37Since each theory-agent’s preferences are over the space of equilibrium outcomes, each theory-agent cares not just about how she uses her own resources, but also about how the other theory-agents use theirs. Finally, Ai is certain that Ti is true. An option is appropriate in χ iff it is the aggregate of all of the actions performed by the decision maker’s theory-agents in an equilibrium of the economic model of χ.38Some of these ideas have previously been adumbrated in blog posts by Holden Karnofsky (2016; 2018) and Michael Plant (2022). (This is a case of multiple discovery: I read Karnofsky and Plant’s blog posts after completing the first draft of this paper.)

PRT can be understood as a particular kind of bargaining theory, since economic agents interacting with each other in an exchange economy are eo ipso bargainers. In this respect, NBT and PRT have something in common. On the other hand, there are several fundamental differences between NBT and PRT. Footnote 39 provides a résumé of those differences.39There are at least seven differences between PRT and Greaves and Cotton-Barratt’s NBT:

(1) As I explain in §3.2, the motivating ideas are different. Or at least: PRT’s motivating idea is more specific than NBT’s. Free markets always involve some element of bargaining, but not all bargaining problems take place in free markets. For instance, peace treaty negotiations can be modelled as bargaining problems, even though they are clearly not examples of market interactions. Unlike NBT, PRT is specifically inspired by the market system.

(2) As I explain in §3.5, PRT uses Position Maximin rather than the Asymmetric Nash Bargaining Solution to solve the bargaining problem between theory-agents. For this reason, PRT but not NBT is applicable in cases where one or more of the entertained theories is non-vNM.

(3) As I explain in §3.7, PRT has ‘broader scope’ than NBT, and hence avoids the problem of scenario individuation (cf. n. 22 above).

(4) Relatedly, the revisions to PRT introduced in §3.9 have no parallels in Greaves and Cotton-Barratt’s NBT.

(5) The ‘disagreement point’ used in PRT’s model of resource-distribution choice situations is different from any of the disagreement points proposed by Greaves and Cotton-Barratt (2019, §3.1).

(6) Relatedly, my approach to choosing a disagreement point is very different from Greaves and Cotton-Barratt’s. According to Greaves and Cotton-Barratt (2019, §3.1), one should simply “select some disagreement point such that NBT with that choice of disagreement point supplies a satisfactory” theory of appropriateness. By contrast, PRT’s disagreement point follows from the motivating idea of PRT. In resource distribution cases like Distribution, each theory-agent is endowed with her fair share of the decision maker’s resources. And as I explain in §3.10, in choices between two or more discrete options, each theory-agent is endowed with her fair chance to win the lottery to decide which action is to be performed.

(7) Implicit in Greaves and Cotton-Barratt’s presentation of NBT is the assumption that each theory-agent’s beliefs about what would happen if the decision maker were to perform any action are identical to the decision maker’s own beliefs about this. PRT largely shares this assumption; but it also departs from it in one crucial respect, as I explain in §4.2. This departure allows PRT to provide an account of the value of moral information (which Greaves and Cotton-Barratt do not discuss).

3.2 Motivation

The motivating idea behind PRT is that the problem of decision making under moral uncertainty is closely analogous to the problem of incompossible preferences fundamental to microeconomics. One part of this problem is the problem of scarcity, which has been faced by every society in history. The problem of scarcity arises because there are too few resources available for every individual to be able to satisfy all of their desires for resources. Hence, any society has to decide how its finite resources will be allocated, with every allocation profile having an opportunity cost. A system of property rights and free markets is an elegant way of resolving this problem of scarcity. The initial distribution of property rights determines who is initially entitled to each unit of resources. Property owners can then trade resources with each other on the free market. Under idealised conditions of perfect information and perfect rationality, these trades will always be Pareto improvements: each agent will value what she is buying more than she values what she is selling. This is a desirable feature of the market response to the problem of scarcity.

The problem of scarcity is not the only element of the problem of incompossible preferences. Another element concerns incompossible preferences about individual behaviour. Suppose, for instance, that I know some embarrassing things about your personal life. I want to gossip to others about them, and so does the National Enquirer; but you would prefer for me to keep quiet. Logically necessarily, only one of these two preferences can be satisfied. Once again, a market system can determine which one it will be. Supposing that the law does not classify my gossip as defamatory, I am initially endowed with the right to share this gossip with whomsoever I choose. However, I also have the option to sell you that right, in the form of a nondisclosure agreement (you would pay me to sign a contract promising not to share my gossip with anyone). Under conditions of perfect information and perfect rationality, these kinds of trades are always Pareto improvements. I will sign the nondisclosure agreement only if I value the money you will pay me more than I value the right to share my gossip with the National Enquirer. Once again, this is a desirable feature of the market response to incompossible preferences.

Speaking metaphorically, being morally uncertain is a bit like being pulled in several different directions by several different parts of oneself. For instance, perhaps the dominant part of me is utilitarian, and so pulls me in the direction of maximising total utility. But perhaps another part of me is Kantian, and so pulls me in the direction of acting only according to the maxims that I can will to become universal laws. Each part of oneself has its own preferences over how one should act. In my experience, this way of describing things rings true to the particular phenomenology of moral uncertainty. The challenge for a theory of appropriateness is to derive a coherent decision rule from this collection of competing preferences.

Decision making under moral uncertainty is closely analogous to the economic problem of incompossible preferences. First, the problem of moral uncertainty is in part a problem of resource scarcity.40Cf. Lockhart 2000, chapter 8. If J had unlimited resources in a case like Distribution, then she could afford to donate unlimited amounts of money to both Thealth’s and Tfuture’s favourite charities – enough for both charities to have more money than they would know what to do with. Without resource scarcity, moral uncertainty in Distribution is unproblematic. Alternatively, suppose that some transplant surgeon has some credence in both utilitarianism and deontology. This surgeon has the option to murder one of her patients in order to harvest and transplant the patient’s organs, thereby saving five other people. If there is a shortage of organs for transplant, then the surgeon faces the problem of decision making under moral uncertainty. However, if the surgeon already has unlimited access to organs for transplant, then utilitarianism and deontology agree that she should not murder the patient. Once again, without resource scarcity moral uncertainty is unproblematic.

However, resource scarcity is not the only element of the problem of decision making under moral uncertainty. Another element concerns incompossible evaluations of individual behaviour. In Jackson, for instance, T1 claims that the agent should perform option A, whereas T2 claims that the agent should perform option C. Moreover, it might well be logically necessary that at most one of these two preferences can be satisfied. In that case, no amount of extra resources in Jackson could dissolve the problem of decision making under moral uncertainty. This case would then be analogous to interpersonal cases of incompossible preferences about individual behaviour such as the gossip case discussed above.41On PRT’s response to Jackson cases, see §3.10 below.

To summarise: the problem of decision making under moral uncertainty is closely analogous to the economic problem of incompossible preferences. One elegant solution to the economic problem is a system of property rights and free markets. Under idealised conditions, this system has certain attractive properties: the initial distribution of property rights can be arranged such that each agent receives her fair share; and then free trade ensures Pareto optimality. This motivates the suggestion that an analogous Property Rights approach to the problem of moral uncertainty is worthy of investigation.

3.3 Distribution

For a simple illustration of PRT, consider Distribution. PRT says that we should model Thealth and Tfuture as property-owning economic agents – Ahealth and Afuture respectively – whose preference structures over outcomes are simply the choiceworthiness structures of Thealth and Tfuture over the corresponding actions. Ahealth is initially assigned ownership of 60% of the decision maker’s philanthropic budget, and Afuture is initially assigned ownership of the remaining 40%. If Ahealth and Afuture wish, they can trade and make contracts with each other. They can also choose to spend their endowments in any of the ways open to J. In Distribution, as it happens, Ahealth and Afuture do not have anything to gain by trading or making contracts with each other. Ahealth just wants to donate all of her endowment to global health charities, and Afuture just wants to donate all of her endowment to climate change charities. Hence, according to PRT, it is appropriate for J to donate 60% of her earnings to global health charities, and 40% of her earnings to climate change charities. Proportionality is vindicated in Distribution.

3.4 Double Distribution

Distribution is a simple scenario in which a decision maker has to distribute a continuously divisible resource. I now consider a slightly more complicated resource distribution problem.

Double Distribution: Imagine that Kathleen is trying to decide what to do with her life. Just suppose, for sake of simplicity, that Kathleen only has two ethical decisions to make in her lifetime, and that she must make both of these decisions now. Kathleen’s first decision is what she should do with her career, and her second decision is how she should distribute her charitable donations. For instance, Kathleen might choose to work in global health, and to donate as much of her income as possible to animal rights charities. Suppose that Kathleen has positive credences chealth and cfuture = 1 – chealth in the moral theories Thealth and Tfuture respectively. Under her conditions of moral uncertainty, what is it most appropriate for Kathleen to do?

To apply PRT to this case, I begin by modelling Kathleen as having an initial wealth endowment W, and an initial labour endowment L. L is the amount of time that Kathleen can spend working during her lifetime (I assume, for sake of simplicity, that this value is known with certainty). W is Kathleen’s initial financial wealth, minus the amount of money that she would be required to have now in order to cover her necessary living costs for the remainder of her lifetime. For almost all young people in Kathleen’s position, W will be negative rather than positive. However, I begin by supposing that W is positive, and only later consider the case where W is negative.

As in Distribution, we model the theories Thealth and Tfuture as economic agents, Ahealth and Afuture, whose respective preference structures over outcomes are simply Thealth and Tfuture’s choiceworthiness structures over the corresponding actions. Ahealth will be initially assigned ownership of chealthW and chealthL, and Afuture will be initially assigned ownership of cfutureW and cfutureL. If Ahealth and Afuture wish, they can trade and make contracts with each other, and they can also choose to spend their endowments in any of the ways open to Kathleen. For instance, Afuture might use her labour endowment cfutureL working for a climate change research team. If this work pays wclimate an hour, then Afuture thereby increases her stock of wealth to cfutureW + wclimatecfutureL. Afuture might then donate this wealth to climate change charities.

However, suppose that according to the Tfuture worldview, what the world really needs in Kathleen’s lifetime is more climate change research being done by people like Kathleen. By contrast, according to the Thealth worldview, what the world really needs in Kathleen’s lifetime is more donations to global health charities. Under these suppositions, Ahealth and Afuture will almost certainly find it optimal to trade with one another. Ahealth might sell some of her labour endowment (say L* of it) to Afuture, in return for a monetary payment from Afuture of cfutureW plus Afuture’s wages wclimate(cfutureL + L*) from working for the climate change research team.42I discuss how to determine the value of L* in §3.5 below. Under these assumptions, the equilibrium outcome might look something like this: Afuture will work cfutureL + L* hours in the climate change research team; Ahealth will work her remaining chealthL – L* hours as, say, a freelance software developer earning wtech per hour; and Ahealth will donate W + wclimate(cfutureL + L*) + wtech(chealthL – L*) to global health charities. Hence, according to PRT, it would be most appropriate for Kathleen to work cfutureL + L* hours in the climate change research team, and to work chealthL – L* hours as a freelance software developer, and to donate all of her wealth plus income to global health charities.43I have assumed here that wages are linear in hours worked. In the real world, this assumption is likely to be unrealistic: having two different careers is less efficient than having only one. Relaxing this assumption might, for instance, make it appropriate for Ahealth and Afuture to agree to jointly pursue a single career in software development, with Ahealth donating her income plus wealth to global health charities, and Afuture donating her income plus wealth to climate change charities.

Things can become even more complicated if we make alternative assumptions about the employment options open to Kathleen. Suppose, for instance, that it is impossible for Kathleen to work for only part of her career (i.e.: for anything less than L) in fields that the Tfuture worldview regards as morally valuable, such as climate change research. In that case, Afuture will inherit the same limitations. Afuture can only work in climate change research if Afuture can afford to buy all of Ahealth’s labour endowment from Ahealth. Ahealth, however, might prefer to keep her labour hours than to sell them to Afuture at a price of cfutureW + wclimateL, which is the maximum offer that Afuture can afford to make. In that case, Afuture will not be able to work in climate change research. Suppose that under these conditions, Afuture will, just like Ahealth, favour a plan of ‘earning to give.’ Afuture and Ahealth will both use their labour endowments working as freelance software developers, earning wtech per hour. Afuture will then donate cfutureW + wtechcfutureL = cfuture(W + wtechL) to climate change charities, and Ahealth will donate chealthW + wtechchealthL = chealth(W + wtechL) to global health charities. Hence, according to PRT, the appropriate plan would be for Kathleen to work full-time as a software developer, donating cfuture of her wealth plus income to climate change charities, and the rest of it to global health charities.
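The arithmetic of this earning-to-give outcome can be sketched in a few lines of Python. The function name and the numbers used below (a credence of 0.6 in Thealth, W = 10,000, L = 80,000 hours, wtech = 50 per hour) are purely illustrative assumptions and do not come from the text.

```python
def earning_to_give_split(c_health, wealth, labour_hours, wage_tech):
    """Donations when both theory-agents work in tech and donate everything.

    Illustrative helper (an assumption, not part of PRT's formal statement):
    each agent owns a credence-weighted share of wealth W and labour L,
    earns wage_tech per hour worked, and donates her whole wealth plus income.
    """
    c_future = 1 - c_health
    total = wealth + wage_tech * labour_hours  # W + w_tech * L
    return {
        "global_health": c_health * total,    # c_health * (W + w_tech * L)
        "climate_change": c_future * total,   # c_future * (W + w_tech * L)
    }

# Hypothetical inputs chosen only for illustration.
split = earning_to_give_split(c_health=0.6, wealth=10_000,
                              labour_hours=80_000, wage_tech=50)
print(split)
```

The same function also covers a negative W, so long as lifetime income is large enough to pay off the initial debt.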

Finally, suppose that W is negative. In that case, Ahealth and Afuture’s initial wealth endowments chealthW and cfutureW will likewise be negative. In other words: Ahealth and Afuture will initially be endowed with debts. Both Ahealth and Afuture will have to earn enough money – either through working, or through trading with each other – to pay off their initial debts before they can donate any money to charitable causes.

3.5 Trade

Suppose that Ahealth wants to sell her labour endowment, and that the minimum price Ahealth is prepared to accept is strictly less than the maximum price Afuture is willing to pay. In that case, we will need to invoke some normative solution concept to determine what the price will be. Since some moral theories are not representable by vNM choiceworthiness functions (see §2.3 above), we should adopt a solution concept that – unlike the Nash Bargaining Solution – does not require the agents to have vNM preferences.

I now suggest one such solution concept, that I call Position Maximin.44Position Maximin generalises the Imputational Compromise solution discussed by Kıbrıs and Sertel (2007) and Conley and Wilkie (2012). It is also closely related to Sprumont’s Rawlsian Arbitration (1993), Hurwicz and Sertel’s Kant-Rawls Compromise (1999), Brams and Kilgour’s Fallback Bargaining (2001), and Congar and Merlin’s Maximin Rule (2012). For an alternative approach to bargaining problems without vNM preferences, see Nicolò and Perea 2005. First, some terminology. An outcome X is Pareto optimal iff there does not exist any alternative outcome Y that every agent weakly prefers, and that at least one agent strictly prefers, to X.45An agent weakly prefers X over Y iff she thinks that X is at least as good as Y. An agent strictly prefers X over Y iff she thinks that X is better than Y. X is individually rational iff every agent weakly prefers X to the disagreement point (defined in §2.2 above). X is an imputation iff X is both Pareto optimal and individually rational.

Define an agent’s position-measured satisfaction with some imputation X as the fraction of imputations that the agent weakly prefers X over.46This definition assumes that there is a privileged interval-scale measurement for any resource that one might be endowed with, unique up to positive affine transformation. For instance, I assume that measuring time in hours, minutes, or seconds is privileged over measuring it in hours squared, or log hours. Thus, an agent’s position-measured satisfaction with some imputation X is an ordinal measure of the desirability to that agent of X (relative to the other available imputations). An agent is positionally worst-off under some imputation X iff her position-measured satisfaction with X is no greater than any other agent’s. Some outcome X is a Position Maximin Solution to some bargaining problem B iff X is an imputation in B, and the position-measured satisfactions of the positionally worst-off agents in B under X are no smaller than the position-measured satisfactions of the positionally worst-off agents in B under any other imputations.47Unlike the Nash Bargaining Solution, the Position Maximin Solution is defined over the set of possible outcomes rather than over the set of possible utility vectors. That is why the Position Maximin Solution can handle non-vNM preferences (Sakovics 2004). (If there exists more than one Position Maximin Solution for a price dispute in my economic model, then there will simply exist more than one equilibrium, and hence more than one appropriate action.)

The Position Maximin Solution can be understood as the result of an attractive ‘fallback’ procedure for finding a compromise. At the start of this procedure, each agent ‘reports’ the set of imputations S0 that she weakly prefers to every other imputation (in other words: her (joint) favourite imputation(s)). n% of the way through the time allotted for this fallback procedure, each agent reports the set Sn of the imputations that she weakly prefers to at least (100–n)% of all possible imputations. The procedure halts as soon as one or more imputations are being reported by all of the agents. That set of imputations is always identical to the set of Position Maximin Solutions.

§3.6 and 3.8 of this paper illustrate the Position Maximin Solution in cases where the space of imputations is continuously divisible. Here, I illustrate Position Maximin in a case where there are only three imputations. Suppose that there are three agents (A1, A2, and A3) and four possible outcomes (X, Y, Z, and D). The agents’ preferences are as follows, where ≻ denotes ‘is strictly preferred to’:

Table 2: Agents’ preferences

| Agent | Preference ordering |
|---|---|
| A1 | X≻Y≻Z≻D |
| A2 | Z≻Y≻X≻D |
| A3 | Y≻X≻Z≻D |

In our bargaining problem, D is the disagreement point. Hence, there are three imputations, X, Y, and Z. Each agent’s position-measured satisfaction with each imputation is as follows:

Table 3: Position-measured satisfaction

| | X | Y | Z |
|---|---|---|---|
| A1 | 1 | 2/3 | 1/3 |
| A2 | 1/3 | 2/3 | 1 |
| A3 | 2/3 | 1 | 1/3 |

Hence, the position-measured satisfaction of the worst-off agent under X or Z is 1/3, whereas the position-measured satisfaction of the worst-off agent under Y is 2/3. Hence, Y is the unique Position Maximin Solution to this bargaining problem.
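As a check on this example, the following Python sketch recomputes the position-measured satisfactions and the Position Maximin Solution for a finite set of imputations. It assumes that every agent has a strict ordering over the imputations (no ties), and the function names are hypothetical labels introduced here for illustration.

```python
from fractions import Fraction

# Strict preference orderings over the imputations, best first
# (the agents' preferences above, with the disagreement point D dropped).
rankings = {
    "A1": ["X", "Y", "Z"],
    "A2": ["Z", "Y", "X"],
    "A3": ["Y", "X", "Z"],
}

def position_measured_satisfaction(ranking, imputation):
    """Fraction of imputations that the agent weakly prefers `imputation` over."""
    n = len(ranking)
    weakly_worse = n - ranking.index(imputation)  # counts the imputation itself
    return Fraction(weakly_worse, n)

def position_maximin(rankings):
    """Imputations that maximise the satisfaction of the positionally worst-off agent."""
    imputations = next(iter(rankings.values()))
    worst = {
        x: min(position_measured_satisfaction(r, x) for r in rankings.values())
        for x in imputations
    }
    best = max(worst.values())
    return sorted(x for x, s in worst.items() if s == best), worst

solutions, worst_off = position_maximin(rankings)
print(solutions)   # ['Y']
print(worst_off)   # worst-off satisfaction: 1/3 under X and Z, 2/3 under Y
```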

I also use this case to illustrate the ‘fallback’ compromise procedure that results in the Position Maximin Solution. In this procedure, for the first 1/3 of the time allotted, A1 reports the set {X}, A2 reports the set {Z}, and A3 reports the set {Y}. Then, after 1/3 of the time allotted for this procedure has passed, A1 reports {X,Y}, A2 reports {Z,Y}, and A3 reports {Y,X}. At this point the procedure halts, because Y is now being reported by every agent. Thus, Y is the unique Position Maximin Solution to this bargaining problem.
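The fallback procedure for this example can be simulated directly. The sketch below assumes strict orderings over a finite set of n imputations, so that after a fraction (k – 1)/n of the allotted time each agent is reporting her top k imputations; the function name `fallback` is a hypothetical label.

```python
from fractions import Fraction

# Strict preference orderings over the imputations, best first.
rankings = {
    "A1": ["X", "Y", "Z"],
    "A2": ["Z", "Y", "X"],
    "A3": ["Y", "X", "Z"],
}

def fallback(rankings):
    """Return (fraction of time elapsed at halting, commonly reported imputations)."""
    n = len(next(iter(rankings.values())))
    for k in range(1, n + 1):
        # After (k - 1)/n of the time, each agent reports the imputations she
        # weakly prefers to at least a fraction 1 - (k - 1)/n of all imputations,
        # which under strict orderings is exactly her top k.
        reported = [set(r[:k]) for r in rankings.values()]
        common = set.intersection(*reported)
        if common:
            return Fraction(k - 1, n), sorted(common)

halting_time, agreed = fallback(rankings)
print(halting_time, agreed)  # 1/3 ['Y']
```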

In this paper, I tentatively adopt Position Maximin as the solution concept in terms of which PRT is defined. However, I do not claim that Position Maximin is necessarily the best solution concept for this purpose. All I claim is that it represents one promising option.48For instance, many will regard Position Leximin as superior to Position Maximin. Fortunately, Position Leximin is extensionally equivalent to Position Maximin in all of the cases considered in this paper. Correspondingly, I do not claim that PRT defined in terms of Position Maximin is necessarily the best possible version of PRT. All I claim is that this version of PRT is superior to the alternative theories of appropriateness outlined in §2.2, and that it hence represents a significant theoretical step in the right direction.

3.6 Contracts

Distributional Jackson: Imagine that some decision maker has 100 units of a resource, each unit of which can be used to purchase a unit of either X, Y or Z. The decision maker has 50% credence in T1, and 50% credence in T2. If x, y and z are the total amounts spent on X, Y and Z respectively, then T1’s choiceworthiness function is 10x + 9y – 10z, and T2’s choiceworthiness function is –10x + 9y + 10z. In light of her moral uncertainty, what is it most appropriate for the decision maker to do with her 100 resource units? (Just suppose, for sake of simplicity, that this is the last choice situation that the decision maker will face in her lifetime, and that she knows this.49On the relevance of this assumption see §3.7 below. Likewise suppose, for sake of simplicity, that it is impossible for the decision maker to randomise how she will use her resources.)50Permitting random lotteries would complicate the PRT analysis but would not alter the key result.

As before, we begin by modelling T1 and T2 as economic agents, A1 and A2, whose respective preference structures over outcomes are simply T1 and T2’s choiceworthiness structures. A1 and A2 are each initially endowed with 50 units of the resource.

If A1 and A2 were to each spend their endowment on the good that they most prefer out of X, Y and Z, then A1 would purchase 50 units of X and A2 would purchase 50 units of Z. That would leave each theory-agent with a utility of (10 × 50) + (9 × 0) – (10 × 50) = 0. However, if A1 and A2 both spent their endowments on Y, then each of them would have a utility of 9 × 100 = 900. In order to achieve this Pareto improvement, A1 and A2 can enter into a contract, with each theory-agent promising the other to spend her endowment on Y rather than X or Z.

In fact, this contract is the unique Position Maximin Solution in this choice situation. Any outcome where x and z are both strictly positive is Pareto suboptimal, since it would be a Pareto improvement for some amount ε (with ε ≤ x and ε ≤ z) less to be spent on each of X and Z, with 2ε more being spent on Y. On the other hand, any outcome where either x or z is zero is clearly Pareto optimal. Hence, an outcome is Pareto optimal iff either x or z is zero. Such an outcome is individually rational iff whichever of x or z is nonzero is no greater than (approximately) 47.37. (If z = 0, then choiceworthiness according to T2 is ≥ 0 iff x ≤ 47.37. Likewise, if x = 0, then choiceworthiness according to T1 is ≥ 0 iff z ≤ 47.37.) Hence, the set of imputations can be illustrated as follows:

Figure 5: A set of imputations

In Figure 5, an outcome sits on one of the two dashed lines iff it is an imputation. Out of any two imputations, T1 prefers the imputation that lies furthest to the right, whereas T2 prefers the imputation that lies furthest to the left. 50% of the imputations lie to the left of (x = 0, y = 100, z = 0), and 50% lie to the right. Hence, (x = 0, y = 100, z = 0) is weakly preferred to 50% of the imputations by both A1 and A2. Any point strictly to the right of (x = 0, y = 100, z = 0) is weakly preferred to less than 50% of the imputations by T2. Likewise, any point strictly to the left of (x = 0, y = 100, z = 0) is weakly preferred to less than 50% of the imputations by T1. Hence, (x = 0, y = 100, z = 0) is the unique Position Maximin Solution; it is uniquely appropriate for the decision maker to spend all of her resource units on Y. PRT has plausible implications in Distributional Jackson.
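The figures in this analysis can be checked numerically. The Python sketch below evaluates the two choiceworthiness functions, recovers the bound of roughly 47.37, and searches a coarse grid of imputations for the point that maximises the worst-off agent's position-measured satisfaction. The signed coordinate s (positive values are units spent on X, negative values are units spent on Z) and the integer grid are devices assumed here for illustration only.

```python
def t1(x, y, z):
    """T1's choiceworthiness function from Distributional Jackson."""
    return 10 * x + 9 * y - 10 * z

def t2(x, y, z):
    """T2's choiceworthiness function from Distributional Jackson."""
    return -10 * x + 9 * y + 10 * z

# Disagreement point: each agent spends her 50 units on her favourite good.
print(t1(50, 0, 50), t2(50, 0, 50))    # 0 0
# Contract: both agents spend everything on Y.
print(t1(0, 100, 0), t2(0, 100, 0))    # 900 900

# With z = 0 and y = 100 - x, T2 = 900 - 19x, so T2 >= 0 iff x <= 900/19.
BOUND = 900 / 19
print(round(BOUND, 2))                 # 47.37

def outcome(s):
    """Map the signed coordinate s (a hypothetical device) to an outcome (x, y, z)."""
    return (s, 100 - s, 0) if s >= 0 else (0, 100 + s, -s)

def worst_off_satisfaction(s):
    """Position-measured satisfaction of the worse-off agent at coordinate s."""
    a1 = (s + BOUND) / (2 * BOUND)   # A1 prefers larger s
    a2 = (BOUND - s) / (2 * BOUND)   # A2 prefers smaller s
    return min(a1, a2)

grid = range(-47, 48)                # integer coordinates inside [-BOUND, BOUND]
best = max(grid, key=worst_off_satisfaction)
print(best, outcome(best))           # 0 (0, 100, 0)
```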

3.7 Future-oriented contracts

Theory-agents can also make contracts with each other governing future choice situations. In particular, under PRT, theory-agents will often use future-oriented contracts to maximise their influence over the choice situations that matter most to them.

Priorities: Imagine that at time t1 a decision maker has 100 units of some resource that she must spend immediately. She will also later receive (at time t2) another 100 units. At time t1, each resource unit can be used to purchase a unit of either D or E. At time t2, each resource unit can be used to purchase a unit of either E or F. The decision maker has 50% credence in T1 and 50% credence in T2. Suppose for simplicity that she will continue to have these credences forever. If d, e, and f are the total amounts spent on D, E, and F respectively across both time periods, then T1’s choiceworthiness function is 10d + 2e + f, and T2’s choiceworthiness function is d + 2e + 10f. In light of her moral uncertainty, what is it most appropriate for the decision maker to do with her resources in each choice situation? (Just suppose, for sake of simplicity, that these are the last two choice situations that the decision maker will face in her lifetime, and that she knows this.51On the relevance of this assumption see n. 53 below. Likewise suppose, for sake of simplicity, that it is impossible for the decision maker to randomise how she will use her resources.)52Permitting random lotteries would complicate the PRT analysis but would not alter the key result.

As before, we begin by modelling T1 and T2 as economic agents, A1 and A2, whose respective preference structures over outcomes are simply T1 and T2’s choiceworthiness structures. A1 and A2 are each endowed with 50 resource units at t1, which they must spend immediately. Later on (at time t2) they will also each receive another 50 units of the resource.

If A1 and A2 were to each spend their initial endowment on the good that they most prefer out of D and E, then A1 would purchase 50 units of D, and A2 would purchase 50 units of E (recall that at t1, D and E are the only options). And then at t2, after receiving more of the resource, A1 would purchase 50 units of E, and A2 would purchase 50 units of F. That would leave each theory-agent with a utility of (10 × 50) + (2 × 100) + 50 = 750. However, if 100 units of the resource were spent on D at time t1, and 100 units spent on F at time t2, then each theory-agent would have a utility of (10 × 100) + 100 = 1,100. In order to achieve this Pareto improvement, A1 and A2 can enter into a contract, with A2 promising to purchase 50 units of D at time t1, and A1 promising to purchase 50 units of F at time t2. In fact, this contract is the unique Position Maximin Solution in this choice situation (the proof is omitted, since it is very similar to the one given for Distributional Jackson).53However, things become much more complicated if we relax the simplifying assumption that the decision maker knows she will not face any further choice situations in her lifetime. If the decision maker believes that she will face additional choice situations after the two described in Priorities, then the outcomes (and hence the imputations) under consideration will have to specify not only what happens in the two choice situations described in Priorities, but also what will happen in the choice situations that will come after them. Any application of PRT to a real world decision problem will have to take such complexities into account. For a response to the worry that this makes PRT implausibly complex, see §4.3 below.

This example illustrates that under PRT, moral theory-agents will often use future-oriented contracts to maximise their influence over the choice situations that matter most to them. In Priorities, A2 in some sense sells A1 the right to choose between D and E, in return for the right to choose between E and F. The latter decision matters more than the former for A2, and likewise mutatis mutandis for A1.
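The utilities quoted for Priorities can be reproduced with a short Python sketch. The dictionary names are hypothetical; the two choiceworthiness functions and the purchase quantities are taken from the case as described.

```python
def t1(d, e, f):
    """T1's choiceworthiness function in Priorities."""
    return 10 * d + 2 * e + f

def t2(d, e, f):
    """T2's choiceworthiness function in Priorities."""
    return d + 2 * e + 10 * f

# Totals across both time periods.
no_contract = {"d": 50, "e": 100, "f": 50}    # each agent buys her favourite good
with_contract = {"d": 100, "e": 0, "f": 100}  # all of t1 on D, all of t2 on F

print(t1(**no_contract), t2(**no_contract))       # 750 750
print(t1(**with_contract), t2(**with_contract))   # 1100 1100
```

Both theory-agents gain 350 from the contract, which is why each is willing to give up her share of the choice she cares less about.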

Suppose that the decision maker in Priorities does not act appropriately at time t1. Does this make a difference to what it is appropriate for the decision maker to do at time t2? I claim that it does not. Contracts between theory-agents concern how resources are used in the economic model of Priorities, which determines which actions are appropriate for the decision maker at times t1 and t2. If the decision maker does not purchase 100 units of D at t1, then she can be legitimately reproached for failing to act appropriately. In virtue of this fact, there is a sense in which A1 ‘gets what she paid for’ regardless of whether or not the decision maker acts appropriately at t1, insofar as A1 gets to determine what is appropriate. Regardless of what the decision maker actually purchases at time t1, it is still most appropriate for her to purchase 100 units of F at t2.

3.8 Uncertainty about the future

In many real-world cases, the decision maker is uncertain about which choice situations she will face in the future. I stipulate that in such cases, each theory-agent has the profile of credences over possible futures of the world that is best warranted by the decision maker’s present evidence. Theory-agents will then be able to sign conditionalized contracts with each other, of the form: ‘I agree to give you q units of resource Q now, in return for you agreeing that if a choice situation of type S occurs in the future, then in that situation you will give me r units of resource R.’ A contract of this sort is just a kind of risky outcome, and hence is evaluable by each theory-agent for choiceworthiness in much the same way as any other outcome is so-evaluable. As always, each theory-agent will trade and make contracts so as to maximise choiceworthiness.

Some theory-agents might find it optimal to enter into some rather interesting contracts with each other.

Risk: Imagine that at time t1, a decision maker has 100 units of some resource that she must spend immediately. Her evidence also suggests there is a 20% chance of her later receiving (at time t2) another 100 units; otherwise, she will receive nothing. At time t1, each resource unit must be used to purchase a unit of either G or H. At time t2, each resource unit must be used to purchase a unit of either I or J. The decision maker has 50% credence in T1, and 50% credence in T2, both of which have vNM choiceworthiness functions. Suppose for simplicity that the decision maker will continue to have these credences forever. If g, h, i and j are the total amounts spent on G, H, I and J respectively, then T1’s choiceworthiness function is 2g + h + i – 16j, and T2’s choiceworthiness function is g + 2h – 6i + j. In light of their uncertainty about t2, which contract should the theory-agents A1 and A2 (representing T1 and T2 respectively) make with each other, if any? (As before, suppose for sake of simplicity that the decision maker will face at most these two choice situations in the remainder of her lifetime, and that she knows this.54On the relevance of this assumption see n. 53 above. Likewise suppose, for sake of simplicity, that it is impossible for the decision maker to randomise how she will use her resources.)55Permitting random lotteries would complicate the PRT analysis but would not alter the key result.

If A1 and A2 were to each spend their initial endowment on the good that they most prefer out of G and H, then A1 would purchase 50 units of G, and A2 would purchase 50 units of H. And if they received more of the resource at t2, A1 would purchase 50 units of I, and A2 would purchase 50 units of J. That would leave A1 and A2 with ex ante utilities (since their utility functions are vNM) of 2 × 50 + 50 + 0.2 × (50 – 16 × 50) = 0 and 50 + 2 × 50 + 0.2 × (–6 × 50 + 50) = 100 respectively. However, if A1 agreed to purchase 50 units of H at t1 in return for A2 promising to purchase 25.21 units of I at t2, then A1 and A2 would have ex ante utilities of 100 + 0.2 × (75.21 – 16 × 24.79) = 35.71 and 2 × 100 + 0.2 × (–6 × 75.21 + 24.79) = 114.71 respectively.

In fact, this is the unique Position Maximin Solution in this choice situation. If A1 and A2 together agree to purchase g* units of G and h* units of H at t1, plus i* units of I and j* units of J if the decision maker receives 100 resource units at t2, then A1 and A2’s ex ante utilities are, respectively u1 = 2g* + h* + 0.2i* – 3.2j* and u2 = g* + 2h* – 1.2i* + 0.2j*.

Since g* = 100 – h* and j* = 100 – i*, we can simplify these ex ante utilities as follows:

u1 = 200 – 2h* + h* + 0.2i* – 320 + 3.2i*

= -120 – h* + 3.4i*

u2 = 100 – h* + 2h* – 1.2i* + 20 – 0.2i*

= 120 + h* – 1.4i*

Figure 6: Utility functions in an ‘Edgeworth box’

Each dashed diagonal line in Figure 6 is a set of points that all give A1 the same utility. Likewise, each undashed diagonal line is a set of points that all give A2 the same utility. A1 strictly prefers any points below and/or to the right of any dashed line over any of the points on that particular line, and strictly disprefers any points above and/or to the left. Likewise, A2 strictly prefers any points above and/or to the left of any undashed diagonal line over any of the points on that particular line, and strictly disprefers any points below and/or to the right. Hence, Figure 7 illustrates the set of points that are individually rational (shaded in grey).

Figure 7: Indifference lines in an ‘Edgeworth box’

Figure 8 illustrates the set of points strictly preferred to some arbitrarily chosen individually rational outcome where h* < 100.

Figure 8: Indifference lines in an ‘Edgeworth box’

Since this region is non-empty, no such point is Pareto optimal. By contrast, any outcome where h* = 100 is Pareto optimal, as Figure 9 illustrates.

Figure 9: Indifference lines in an ‘Edgeworth box’

Hence, an outcome is an imputation iff h* = 100 and 64.71 ≤ i* ≤ 85.71. A1 prefers for i* to be as large as possible, whereas A2 prefers for i* to be as small as possible. Hence, h* = 100 and i* = (64.71 + 85.71)/2 = 75.21 (midway between 64.71 and 85.71) is the unique Position Maximin Solution. At t1, it is uniquely appropriate for the decision maker to purchase 100 units of H. A2 is in some sense insuring A1 against the possibility of the decision maker receiving another 100 resource units at t2. What A1 stands to lose should A2 receive 50 units at t2 without having signed a contract is greater than what A2 stands to lose should A1 receive 50 units at t2 without having signed a contract.
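The bounds 64.71 and 85.71 can be recovered from the simplified utility functions above. The following sketch assumes, as the text does for this case, that h* = 100 and that the Position Maximin Solution lies at the midpoint of the individually rational range of i*; the function and variable names are illustrative.

```python
# Sketch: individually rational range of i* when h* = 100 (names are assumptions).

def u1(h, i):
    return -120 - h + 3.4 * i   # A1's simplified ex ante utility


def u2(h, i):
    return 120 + h - 1.4 * i    # A2's simplified ex ante utility


# No-contract point: 50 units of H at t1 and 50 units of I at t2.
d1, d2 = u1(50, 50), u2(50, 50)

# With h* = 100, solve u1 >= d1 and u2 >= d2 for i*.
lower = (d1 + 120 + 100) / 3.4   # A1 is no worse off than without a contract
upper = (120 + 100 - d2) / 1.4   # A2 is no worse off than without a contract
midpoint = (lower + upper) / 2

print(round(lower, 2), round(upper, 2), round(midpoint, 2))
```

A1's constraint fixes the lower end of the range and A2's constraint fixes the upper end, reflecting their opposed preferences over i*.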

3.9 Evidence and credence change over time

Suppose that at time t2 in Risk, the decision maker does receive 100 resource units. What should PRT say is the most appropriate way to use these resources? According to the Obvious Response, the most appropriate way to use these resources is the way specified by the contracts that all of the theory-agents agreed to at t1. In other words: it is most appropriate for the decision maker to purchase 75.21 units of I and 24.79 units of J.

By contrast, according to the Nonobvious Response, the most appropriate way to use the 100 resource units at t2 is the way specified by the contracts that all of the theory-agents would have agreed to at t1, had the decision maker known at t1 what she knows now (at t2). The decision maker’s evidence at t1 suggested that she had a 20% chance of receiving 100 resource units at t2. At t2, however, the decision maker has acquired new evidence, because at t2 she actually has received 100 resource units. Had the decision maker known with certainty at t1 that she would receive 100 resource units at t2 [n. 56: For sake of argument I assume here that the decision maker could in principle have known this at t1 (if only she had had sufficient evidence and powers of reasoning).], then A2 would not have agreed at t1 to the contract described in §3.8 above. Under these evidential conditions, A1 and A2’s ex ante utilities at t1 would have been, respectively:

u1 = 2g* + h* + i* – 16j*

= -1400 – h* + 17i*

u2 = g* + 2h* – 6i* + j*

= 200 + h* – 7i*

Figure 10: Utility functions in an ‘Edgeworth box’

As Figure 10 suggests, the unique Position Maximin Solution under the Nonobvious Response is h* = 100 and i* = 55.04. Hence, according to the Nonobvious Response, it is most appropriate for the decision maker to purchase 55.04 units of I, and 44.96 units of J.
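The value 55.04 can be reconstructed as follows. This worked derivation assumes that the no-contract point is the same as in §3.8 (each theory-agent spends her 50 units on her own favourite goods at each time) and that, as in §3.8, the Position Maximin Solution is the midpoint of the individually rational range of i* when h* = 100.

```latex
\begin{align*}
\text{No contract } (g^* = h^* = i^* = j^* = 50):\quad
  & u_1 = 2(50) + 50 + 50 - 16(50) = -600, \\
  & u_2 = 50 + 2(50) - 6(50) + 50 = -100. \\
\text{With } h^* = 100:\quad
  & u_1 = -1500 + 17 i^* \ge -600 \iff i^* \ge 900/17 \approx 52.94, \\
  & u_2 = 300 - 7 i^* \ge -100 \iff i^* \le 400/7 \approx 57.14. \\
\text{Midpoint:}\quad
  & i^* = \tfrac{1}{2}\left(\tfrac{900}{17} + \tfrac{400}{7}\right) \approx 55.04,
  \qquad j^* = 100 - i^* \approx 44.96.
\end{align*}
```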

The Nonobvious Response is superior to the Obvious Response because it is inappropriate for T1 to benefit at t2 from the fact that the decision maker had incomplete evidence at t1. In other words: it is inappropriate for the decision maker to be governed by the dead hand of her past evidence. The Nonobvious Response is consistent with these intuitively plausible claims, but the Obvious Response is not. For that reason, the Nonobvious Response is superior.

A related question concerns credences over moral theories. Until now, I have been assuming that the decision maker’s credences over moral theories remain constant over time. But what should happen in cases where these credences change over time?

Suppose, for instance, that A1 agrees to give A2 some of her resources at time t1 in return for A2 giving A1 some of her resources at time t2. What should happen if between t1 and t2 the decision maker transfers much of her credence in T1 to some alternative theory T3? It is most plausible to suppose that this credence shift should diminish A1’s contractual rights against A2 at time t2. If a decision maker has come to substantially repudiate T1, then A1 should no longer have extensive influence in determining how it is most appropriate for the decision maker to behave. It is inappropriate for the decision maker to be governed by the dead hand of her past credences over moral theories.

I now show how to honour these intuitions. To determine what is appropriate in some choice situation χn, one should construct an economic model of the whole sequence of choice situations (χ1, …, χn) that the decision maker has faced in her lifetime up to and including χn, where in the model of each choice situation χi:

(i) Each theory-agent Aj is endowed with a share of the decision maker’s resources in χi proportional to the decision maker’s credence at the time of χn in the corresponding moral theory Tj.

(ii) Each theory-agent’s body of empirical evidence is the one that she has at the time of χn.

(iii) As before:

a. Each theory-agent can spend her resources in any of the ways open to the decision maker in χi.

b. Theory-agents can also make contracts with each other, including contracts governing future choice situations (χi+1, χi+2, etc.).

(iv) Finally, the theory-agents believe that any contracts governing choice situations after χn (χn+1, χn+2, etc.) will be rigorously enforced.

An action is appropriate in χn iff it is the aggregate of all of the actions performed in χn by the theory-agents in some equilibrium of the economic model of (χ1, …, χn). In other words: what is actually most appropriate in the current choice situation given one’s current credences and evidence is identical to what would be most appropriate in the current choice situation if one had always had those credences and that evidence. [n. 57: Note that (iv) is not in tension with the Nonobvious Response. (iv) is a feature of the model that determines what is most appropriate in χn. Hence, this model ‘goes out of date with’ χn. For instance, appropriateness in χn+1 is determined by an entirely new model – which itself conforms to (i)-(iv), except with the instances of ‘n’ replaced by instances of ‘n+1’.]
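Clause (i) of this construction can be sketched in a few lines. The sketch covers only the endowment step: it does not model evidence, contracts or equilibrium. The function name model_endowments and the credence figures are hypothetical, chosen to mirror the case in which credence has shifted from T1 to T3.

```python
# Sketch of clause (i) only; names and numbers are hypothetical illustrations.

def model_endowments(resources_by_situation, current_credences):
    """Endow theory-agents in every situation χ1..χn using the credences held at χn."""
    total = sum(current_credences.values())
    return [
        {theory: resources * credence / total
         for theory, credence in current_credences.items()}
        for resources in resources_by_situation
    ]


# Hypothetical credences at the time of χn, after a shift from T1 to T3.
credences_at_chi_n = {"T1": 0.1, "T2": 0.5, "T3": 0.4}

# Two choice situations, each with 100 resource units.
endowments = model_endowments([100, 100], credences_at_chi_n)
for index, shares in enumerate(endowments, start=1):
    print(index, shares)
```

Because the current credences are applied to every choice situation in the sequence, A1's endowment is small even in the model of χ1, which is how the construction avoids the dead hand of past credences.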

3.10 Choices between discrete options

In introducing PRT, I have been considering cases where the decision maker has to choose how to use an endowment of some continuously divisible resource. By contrast, the existing philosophical literature on moral uncertainty (cf. §2.1 above) is dominated by cases where a decision maker has to choose between two or more discrete options (such as killing the fat man versus letting five people die in ‘bridge’ versions of the trolley problem).

The natural way to extend PRT so as to cover these discrete cases is to stipulate that before each choice situation, each entertained theory-agent A is initially endowed with some c(A)-length segment of the interval [0,1], where c(A) denotes the decision maker’s credence in the moral theory corresponding to A. That segment of [0,1] is like a lottery ticket. A number from [0,1] will be selected randomly, and whichever theory-agent ‘owns’ the segment of [0,1] to which the randomly chosen number belongs will be given the right to perform any one of the actions open to the decision maker in this choice situation – call this the Lottery Rule. [n. 58: Cf. Greaves and Cotton-Barratt 2019, §3.1.] Before the random number is generated, theory-agents can trade their segments of [0,1] between each other, and make contracts. The possibility of contract making means that the equilibrium outcome will sometimes be fully determined even before the random number is selected, either because one theory-agent comes to own every portion of [0,1], or because the theory-agents all promise each other to perform a certain action regardless of which random number is selected. The latter is what will happen, for instance, in many Jackson cases.
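A single application of the Lottery Rule might be sketched as follows. The function name lottery_winner, the agent labels and the 60/40 credences are illustrative assumptions, and trading is represented simply by changing the segment lengths before the draw.

```python
import random

# Sketch of one application of the Lottery Rule; names and numbers are assumptions.

def lottery_winner(segments, draw):
    """Return the theory-agent who owns the part of [0, 1] containing `draw`.

    `segments` maps each agent to the length of the segment she currently owns.
    """
    upper_bound = 0.0
    winner = None
    for agent, length in segments.items():
        upper_bound += length
        winner = agent
        if draw < upper_bound:
            break
    return winner


initial = {"A1": 0.6, "A2": 0.4}   # hypothetical credences c(A1), c(A2)
after_trade = {"A1": 1.0}          # A2 has sold her whole segment to A1

print(lottery_winner(initial, random.random()))
print(lottery_winner(after_trade, random.random()))
```

Once one theory-agent owns every portion of [0,1], the winner is the same for every possible draw, so the outcome is fixed before the random number is generated.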

In cases where the decision maker is endowed with n indivisible units of some resource, each indivisible unit of the resource should be distributed by its own application of the Lottery Rule – call this iterated application of the Lottery Rule. [n. 59: By contrast, Greaves and Cotton-Barratt’s lottery proposal (2019, §3.1) would distribute all n units through a single winner-takes-all lottery.] As some fixed-size resource parcel becomes more divisible, the number of indivisible units n increases. And as n increases, the probability distribution induced by iterated application of the Lottery Rule tends towards each theory-agent A receiving c(A) of the resource parcel with certainty. This is a desirable result: it shows that the Lottery Rule is entirely consistent with dividing continuously divisible resources between theory-agents in proportion to the decision maker’s credences in the corresponding theories.
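The convergence claim can be illustrated numerically. The sketch below assumes that the n lotteries are independent and that no segments are traded, so that the number of units won by A is binomially distributed; under that assumption A's expected share is c(A) and the standard deviation of her share is sqrt(c(A)(1 – c(A))/n). The function name and the 60% credence are illustrative.

```python
import math

# Sketch assuming independent lotteries and no trading; names are illustrative.

def share_std(credence, n):
    """Standard deviation of an agent's share of a parcel split into n units."""
    return math.sqrt(credence * (1 - credence) / n)


for n in (1, 100, 10_000):
    print(n, round(share_std(0.6, n), 4))
```

The spread around c(A) shrinks in proportion to 1/sqrt(n), so a more finely divisible parcel yields a share that is ever closer to c(A).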

4. Evaluating the Property Rights Theory

4.1 Advantages

PRT has several advantages. Firstly, it vindicates Proportionality in Distribution. Secondly, it has no more trouble handling cases where some theories’ choiceworthiness functions are ordinal and non-vNM than it has handling cases where all theories’ choiceworthiness functions are cardinal and/or vNM. [n. 60: By contrast, NBT is only applicable in cases where all entertained moral theories have vNM choiceworthiness functions (see §2.3 above). Vis-à-vis MEC on this score, cf. n. 19 above.] Thirdly, through the mechanism of future-oriented contracts, PRT allows moral theories to have greatest influence (in determining appropriateness) in the particular choice situations that matter most to them. [n. 61: By contrast, MacAskill, Bykvist and Ord’s preferred extension of MEC to handle cases of intertheoretic unit-incomparability (cf. n. 19 above) does not always give moral theories greater influence over the choice situations that matter more to them ([redacted]).]

4.2 Moral information

One attractive feature of MEC (a popular rival to PRT) is that it supplies us with an account of the value of moral information. [n. 62: MacAskill, Bykvist and Ord 2020, chapter 9.] Imagine that some decision maker knows that she will only face two choice situations in her lifetime. At time t1 she has to choose whether to pay $w for an infallible oracle to tell her which moral theory is true. After that, at time t2, she will face Distributional Jackson. At the present moment t0 the decision maker has 50% credence in T1 and 50% credence in T2. According to both T1 and T2, spending $w is equivalent to sacrificing w units of choiceworthiness. T1 and T2’s choiceworthiness functions are intertheoretically unit-comparable.

If the decision maker does not consult the oracle at t1, then purchasing 100 units of Y at t2 will maximise expected choiceworthiness at 900. If the decision maker does consult the oracle at t1, then following the plan:

- if the oracle endorses T1 then purchase 100 units of X,

- but if the oracle endorses T2 then purchase 100 units of Z

at t2 will maximise choiceworthiness at (1000 – w) in each of the two epistemically possible cases. Hence, consulting the oracle will uniquely maximise expected choiceworthiness iff w < 100. According to MEC, at any price less than $100 it is uniquely appropriate to consult the oracle. Such is the value of moral information.
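The threshold can be expressed as a simple comparison. The sketch uses only the two figures stated above (900 without the oracle, 1000 – w with it); the function name and parameter names are illustrative assumptions.

```python
# Sketch of the MEC comparison; names are assumptions, figures come from the text.

def oracle_is_worth_buying(w, ec_without_oracle=900, cw_with_oracle=1000):
    """True iff paying w strictly raises expected choiceworthiness."""
    return cw_with_oracle - w > ec_without_oracle


for price in (50, 99.99, 100, 150):
    print(price, oracle_is_worth_buying(price))
```

At exactly w = 100 the two plans tie, so consulting the oracle no longer uniquely maximises expected choiceworthiness.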

What does PRT imply in this case? Well, if the decision maker does not consult the oracle at t1, then A1 and A2 both know that they will contract with each other at t2 to purchase 100 units of Y (see §3.6 above). In that case, A1 and A2 will each have a utility of 900. Now suppose that the decision maker decides to consult the oracle at t1. Recall that A1 is certain that T1 is true, and that A2 is certain that T2 is true. Hence, before t1 each theory-agent is certain that her preferred theory will be endorsed by the oracle. If T1 is endorsed by the oracle, then the decision maker will have 100% credence in T1 at t2. Therefore, A1 will receive all 100 resource units, and will spend them all on X, giving A1 a utility of 1000 – w. Likewise, if T2 is endorsed by the oracle, then the decision maker will have 100% credence in T2 at t2. Therefore, A2 will receive all 100 resource units, and will spend them all on Z, giving A2 a utility of 1000 – w. Hence, each theory-agent strictly prefers the decision maker to consult the oracle iff w < 100. According to PRT, at any price less than $100 it is uniquely appropriate to consult the oracle. Just like MEC, PRT supplies us with an account of the value of moral information.

4.3 Complexity